I’ve always thought it’s cool to have a pet project. Building something single-handedly gives you insight into problems you won’t come across when you’re just one of many people working on a project. One such problem is setting up proper operations and a deployment process. Sure, for something small even manual deployment might be a good choice. But if it’s just for fun, why not take the chance to learn something? Let me tell you what I’ve learned while managing my own project about using AWS Parameter Store to manage secrets.

The Problem – Passing envs to ECS

When developing a project, we want to avoid storing any secrets in the code. One solution is to pass them as environment variables. This way they live only in the process’s memory rather than in a file on disk, so they are much harder to reach through path traversal or remote file inclusion (although on Linux, a path traversal bug that can read /proc/self/environ may still expose them). Otherwise, retrieving them requires gaining control over the process, i.e. a successful remote code execution. But if that happens, we’re FUBAR anyway and have bigger problems.

For example, the database connection config for my project looks like this:

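```python
# Assumption: `env` is a django-environ `Env` instance; any callable that
# returns the value of an environment variable would work the same way.
import environ

env = environ.Env()

DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": env("POSTGRES_DB"),
        "USER": env("POSTGRES_USER"),
        "PASSWORD": env("POSTGRES_PASSWORD"),
        "HOST": env("POSTGRES_HOST"),
        "PORT": 5432,
    }
}
```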

The env(NAME) call retrieves the variable called NAME from the environment of the process running my code. Docker Compose can load these variables from a file that lists them. The config is as follows:

```yaml
services:
  db:
    image: postgres:12-alpine
    env_file:
      - .env
    ports:
      - "5432:5432"
    volumes:
      - learn-web-dev-data:/var/lib/postgresql/data

  web:
    build:
      dockerfile: docker/Dockerfile
      context: .
    command: python manage.py runserver 0.0.0.0:8000
    env_file:
      - .env

# Named volumes must be declared at the top level.
volumes:
  learn-web-dev-data:
```

And the .env file can look like this:

```
POSTGRES_DB=postgres
POSTGRES_USER=postgres
POSTGRES_PASSWORD=postgres
POSTGRES_HOST=db
SECRET_KEY=secretkey
DEBUG=True
```

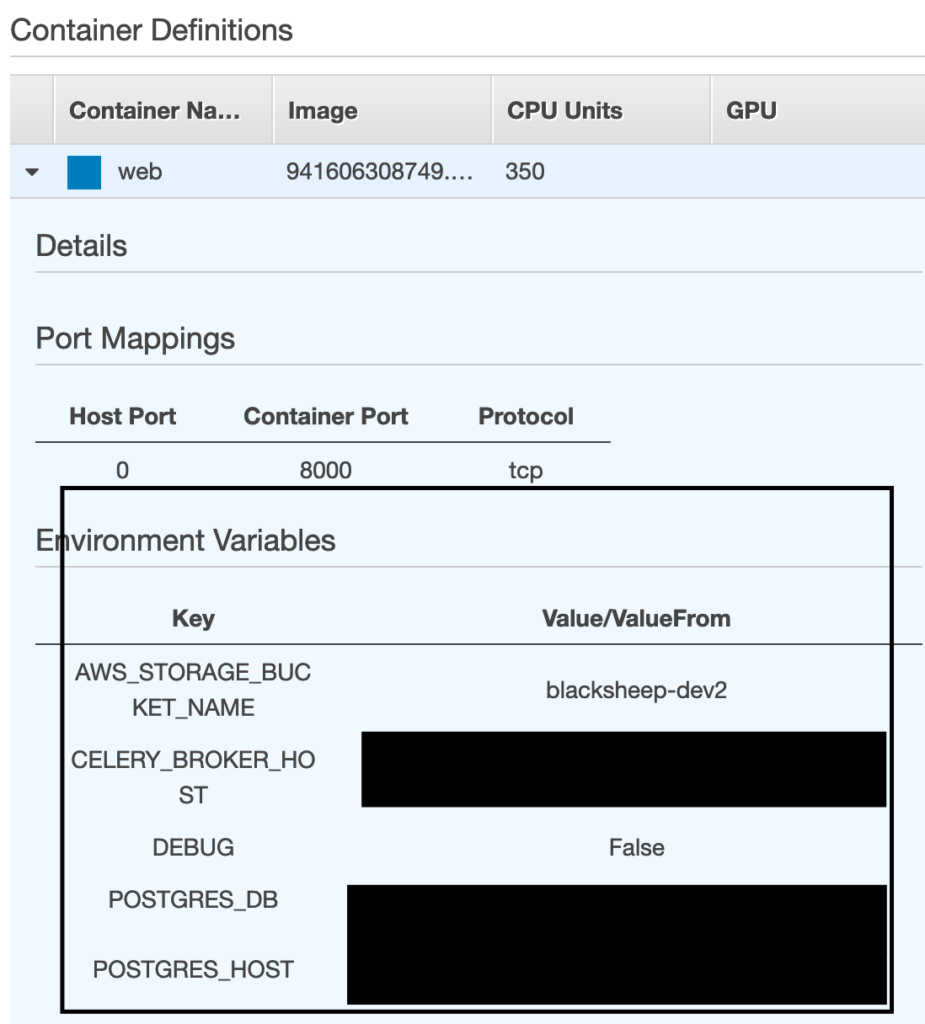
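To make the two moving parts a bit more concrete, here is a simplified sketch in plain Python of reading a file like the one above and looking up a required variable. It is only an approximation: Docker Compose and libraries such as django-environ have their own parsing rules (quotes, interpolation and so on), and the helper names parse_env_file and require_env are hypothetical. require_env plays the role of env() and fails loudly when a variable is missing.

```python
from __future__ import annotations

import os
from typing import Mapping, Optional


def parse_env_file(text: str) -> dict[str, str]:
    """Parse simplified KEY=VALUE lines (no quoting or interpolation support)."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        # Split on the first "=" only, so values may themselves contain "=".
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"Malformed line: {raw_line!r}")
        values[key.strip()] = value.strip()
    return values


def require_env(name: str, environ: Optional[Mapping[str, str]] = None) -> str:
    """Return a required variable, defaulting to the process environment."""
    source = os.environ if environ is None else environ
    try:
        return source[name]
    except KeyError:
        raise RuntimeError(f"Missing required environment variable: {name}") from None
```

Failing at startup when a variable is missing is usually preferable to silently falling back to a default password or an empty host.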

Of course, for the reasons I’ve mentioned, we don’t want to use files in production. Luckily, when deploying Docker containers with ECS, the AWS managed container service, we can pass the variables straight into the container’s environment.
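As a rough illustration, an ECS task definition lists plain environment variables in the environment array of a container definition, as name/value pairs. The sketch below builds that fragment from a Python dict; the container name, image and values are placeholders, and a real task definition needs more fields (CPU and memory, port mappings and so on). Keep in mind that these values are stored in plain text in the task definition, visible to anyone who can read it.

```python
from __future__ import annotations

import json


def container_definition(name: str, image: str, variables: dict[str, str]) -> dict:
    """Build a minimal ECS container definition with plain environment variables."""
    return {
        "name": name,
        "image": image,
        "essential": True,
        # ECS expects a list of {"name": ..., "value": ...} objects.
        "environment": [
            {"name": key, "value": value} for key, value in sorted(variables.items())
        ],
    }


if __name__ == "__main__":
    definition = container_definition(
        "web",                           # placeholder container name
        "example/learn-web-dev:latest",  # placeholder image
        {"POSTGRES_HOST": "db.internal", "DEBUG": "False"},
    )
    print(json.dumps({"containerDefinitions": [definition]}, indent=2))
```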

This is a big step forward. No secrets stored in files = no option to retrieve them using less critical vulnerabilities. But can we do better?

AWS Secrets and KMS

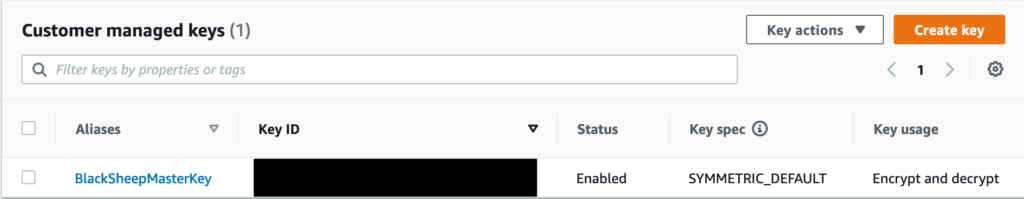

We could encrypt the secrets so that gaining access to them does not mean they are compromised. To do this, we need an encryption key, which is provided by AWS Key Management Service (KMS). The cool feature of this service is that we can restrict access to the key so that we never handle it directly during encryption or decryption. After our application is deployed, everything stays within AWS infrastructure.

Once we’ve created the key, we can use it for data encryption.

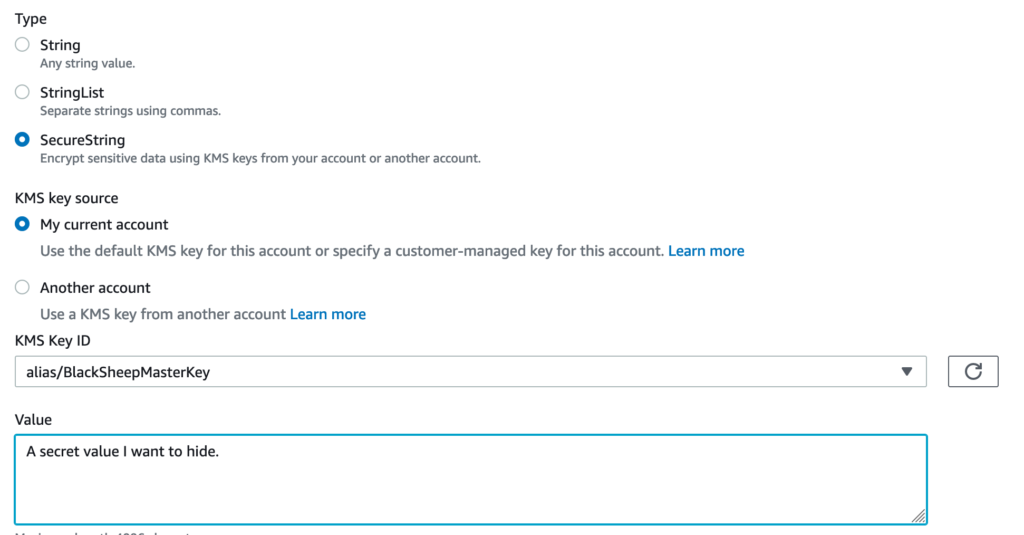
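For reference, the same encryption can be done from code with Boto3’s KMS client. This is a minimal sketch: the key alias alias/my-app-key mentioned below is hypothetical, and the client is passed in so the helpers can be exercised with a stub instead of real AWS. Note that KMS Encrypt accepts at most 4 KB of plaintext, which is plenty for passwords and API keys.

```python
from __future__ import annotations

from typing import Any


def make_kms_client(region_name: str = "eu-central-1") -> Any:
    """Create a real KMS client (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers below also work with a stub

    return boto3.client("kms", region_name=region_name)


def encrypt_secret(client: Any, key_id: str, plaintext: str) -> bytes:
    """Encrypt a small secret with the given KMS key ID, ARN or alias."""
    response = client.encrypt(KeyId=key_id, Plaintext=plaintext.encode("utf-8"))
    return response["CiphertextBlob"]


def decrypt_secret(client: Any, ciphertext: bytes) -> str:
    """Decrypt a blob; for symmetric keys KMS identifies the key from the blob."""
    response = client.decrypt(CiphertextBlob=ciphertext)
    return response["Plaintext"].decode("utf-8")
```

Against AWS this would be called as encrypt_secret(make_kms_client(), "alias/my-app-key", "s3cr3t"). The caller only ever sees the ciphertext and the plaintext; the key material itself never leaves KMS.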

AWS Secrets and SSM Parameter Store

In AWS Systems Manager we can find something called Parameter Store. This service provides centralized management of parameters, which don’t have to be encrypted. For example, we can store the parameters for different ECS task definitions in a single place and update them once instead of clicking through each and every service.

After choosing the SecureString type, we can encrypt the value with a KMS key.

We can then browse the stored parameters in the Parameter Store menu.
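The console steps have a Boto3 equivalent. The sketch below stores a SecureString parameter and then loads every parameter directly under a path such as /BlackSheepLearns/dev/ in one go, following pagination. The helper names are hypothetical, and the client is injected so the code can be tried with a stub; if no key ID is given, SSM encrypts with the AWS managed key alias/aws/ssm.

```python
from __future__ import annotations

from typing import Any, Optional


def store_secret(client: Any, name: str, value: str, key_id: Optional[str] = None) -> None:
    """Create or update a SecureString parameter encrypted with KMS."""
    kwargs = {"Name": name, "Value": value, "Type": "SecureString", "Overwrite": True}
    if key_id is not None:
        # Without KeyId, SSM uses the AWS managed key alias/aws/ssm.
        kwargs["KeyId"] = key_id
    client.put_parameter(**kwargs)


def load_parameters(client: Any, path: str) -> dict[str, str]:
    """Fetch and decrypt every parameter directly under `path`, page by page."""
    path = "/" + path.strip("/")
    prefix = path + "/"
    request = {"Path": path, "Recursive": False, "WithDecryption": True}
    values: dict[str, str] = {}
    while True:
        response = client.get_parameters_by_path(**request)
        for parameter in response["Parameters"]:
            # "/BlackSheepLearns/dev/POSTGRES_DB" -> "POSTGRES_DB"
            values[parameter["Name"][len(prefix):]] = parameter["Value"]
        token = response.get("NextToken")
        if not token:
            return values
        request["NextToken"] = token
```

Loading a whole path at once trades a single call at startup for one get_parameter call per secret.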

AWS Secrets With Django

Once the infrastructure is set up properly, we need to retrieve those values directly in our application, which takes only a few adjustments to the code. We create a retriever that is responsible for collecting the secrets from AWS. Thankfully, it’s as easy as creating a proper Boto3 client:

```python
import boto3

# BaseSecretsRetriever is the project's own base class (not shown here).


class SSMSecretsRetriever(BaseSecretsRetriever):
    def __init__(self):
        region_name = "eu-central-1"
        self._common_prefix = "/BlackSheepLearns/dev/"

        # Create an SSM (Parameter Store) client
        session = boto3.session.Session()
        self._ssm_client = session.client(service_name="ssm", region_name=region_name)

    def retrieve(self, name: str) -> str:
        # WithDecryption=True asks SSM to decrypt SecureString values with KMS.
        response = self._ssm_client.get_parameter(
            Name=self._common_prefix + name, WithDecryption=True
        )
        return response["Parameter"]["Value"]
```

I’m not perfectly happy with this code. At the time of writing, the PR I’ve opened on GitHub contains a few notes to myself on how to improve it, but the core will stay as it is. Let’s go through it.

First, we create a few helper variables, such as the region and the prefix shared by all the parameters. With SSM we can use a different prefix for each environment; mine is currently hardcoded. Next, we ask Boto3 (the AWS SDK for Python) to create an SSM client for us. In the retrieve() method, we call this service with the prefix defined earlier followed by the name of the key we want to retrieve. Notice the WithDecryption=True argument. It tells SSM to decrypt the SecureString value with KMS before returning it. This is fine: we don’t want direct access to the key used for encryption and decryption. Finally, we return the desired value.
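Since the prefix is hardcoded, one possible refinement is sketched here under a few assumptions. It is not the author’s code: it reads the prefix from an environment variable (the name SSM_PARAMETER_PREFIX is hypothetical), accepts an injected client so it can be tested without AWS, and caches values so each parameter is fetched and decrypted at most once per process. It is standalone rather than extending the project’s BaseSecretsRetriever, whose interface isn’t shown.

```python
from __future__ import annotations

import os
from typing import Any, Optional


class CachedSSMSecretsRetriever:
    """Hypothetical variant: configurable prefix, injectable client, caching."""

    def __init__(
        self,
        client: Optional[Any] = None,
        prefix: Optional[str] = None,
        region_name: str = "eu-central-1",
    ) -> None:
        # SSM_PARAMETER_PREFIX is a hypothetical name, e.g. "/BlackSheepLearns/prod/".
        raw_prefix = prefix or os.environ.get("SSM_PARAMETER_PREFIX", "/BlackSheepLearns/dev/")
        # Normalise to exactly one leading and one trailing slash.
        self._prefix = "/" + raw_prefix.strip("/") + "/"
        if client is None:
            import boto3  # only needed when talking to real AWS

            session = boto3.session.Session()
            client = session.client(service_name="ssm", region_name=region_name)
        self._ssm_client = client
        self._cache: dict[str, str] = {}

    def retrieve(self, name: str) -> str:
        # Each parameter is fetched (and decrypted via KMS) at most once.
        if name not in self._cache:
            response = self._ssm_client.get_parameter(
                Name=self._prefix + name, WithDecryption=True
            )
            self._cache[name] = response["Parameter"]["Value"]
        return self._cache[name]
```

In settings.py, the database password could then come from secrets.retrieve("POSTGRES_PASSWORD"), where secrets = CachedSSMSecretsRetriever(), instead of from env("POSTGRES_PASSWORD"). The cache means a rotated parameter is only picked up after the process restarts, which is usually acceptable for containers that are redeployed anyway.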

That’s it. No black magic. The only thing you need to pay attention to is the quirks of AWS. To focus on the high-level concepts, I’ve skipped a few topics, such as a detailed description of ECS (it’s enough to know that it lets you orchestrate Docker containers without Swarm or Kubernetes) and the adjustments needed in IAM.

Stay tuned by subscribing to the newsletter, star the project on GitHub, and happy hacking!